Getting Started

Before you begin, complete steps 1–2 in the First pipeline guide so the binary is on disk and your host meets the prerequisites. Community Edition runs entirely from this single binary—no license key is required to follow the steps below.

1. Start the server

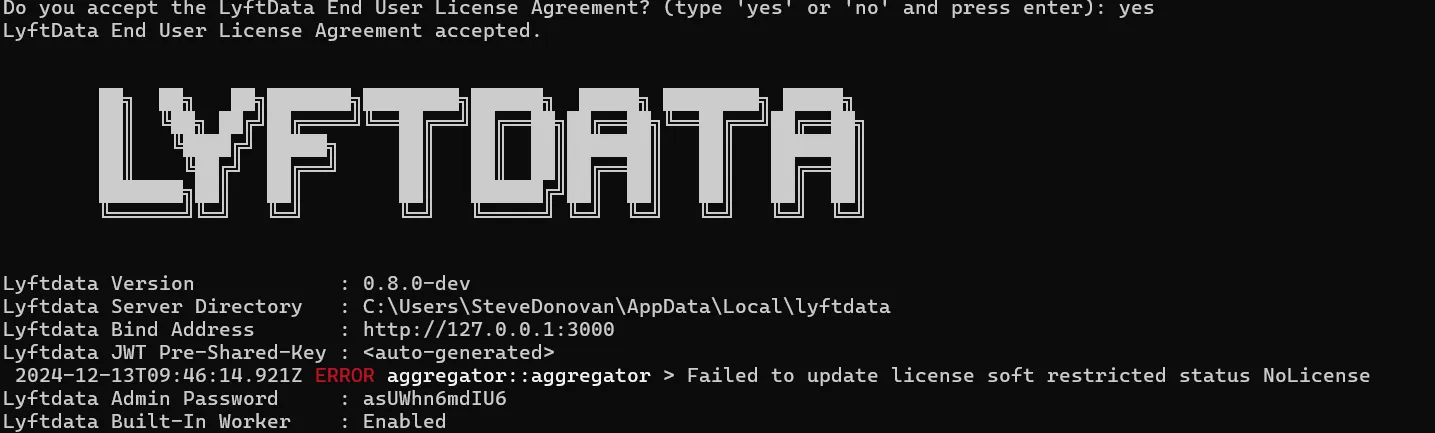

./lyftdata run server

The first launch prompts you to accept the EULA and prints a one-time admin password.

Copy the password from the terminal log; it is not shown again. By default the server listens on 127.0.0.1:3000. To expose it to your network later, pass the --bind-address flag or set the LYFTDATA_BIND_ADDRESS environment variable.
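If you script the first launch (for example in a provisioning script), it can help to wait until the server answers HTTP on its default address before moving on to the browser. The helper below is a minimal sketch, assuming a POSIX-style shell with `local` support and curl installed. The function name wait_for_lyftdata and its defaults are hypothetical and not part of LyftData, and it treats any HTTP response as "up" rather than checking a specific health endpoint.

```shell
# Hypothetical helper (not part of LyftData): poll a URL until something
# answers HTTP, or give up after a number of attempts.
# Usage: wait_for_lyftdata [url] [max_attempts]
wait_for_lyftdata() {
  local url="${1:-http://127.0.0.1:3000}"   # default listen address from the docs
  local max_attempts="${2:-30}"
  local attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    # Without --fail, curl exits 0 for any HTTP response (even a 404),
    # so success only means the port is accepting HTTP requests.
    if curl -s -o /dev/null --max-time 2 "$url"; then
      return 0
    fi
    # Skip the pause after the final attempt.
    if [ "$attempt" -lt "$max_attempts" ]; then
      sleep 1
    fi
    attempt=$((attempt + 1))
  done
  echo "no response from $url after $max_attempts attempts" >&2
  return 1
}
```

For example, launch the server in one terminal and run wait_for_lyftdata in another before opening the browser. If you changed the bind address, pass the matching URL as the first argument.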

2. Sign in and review the dashboard

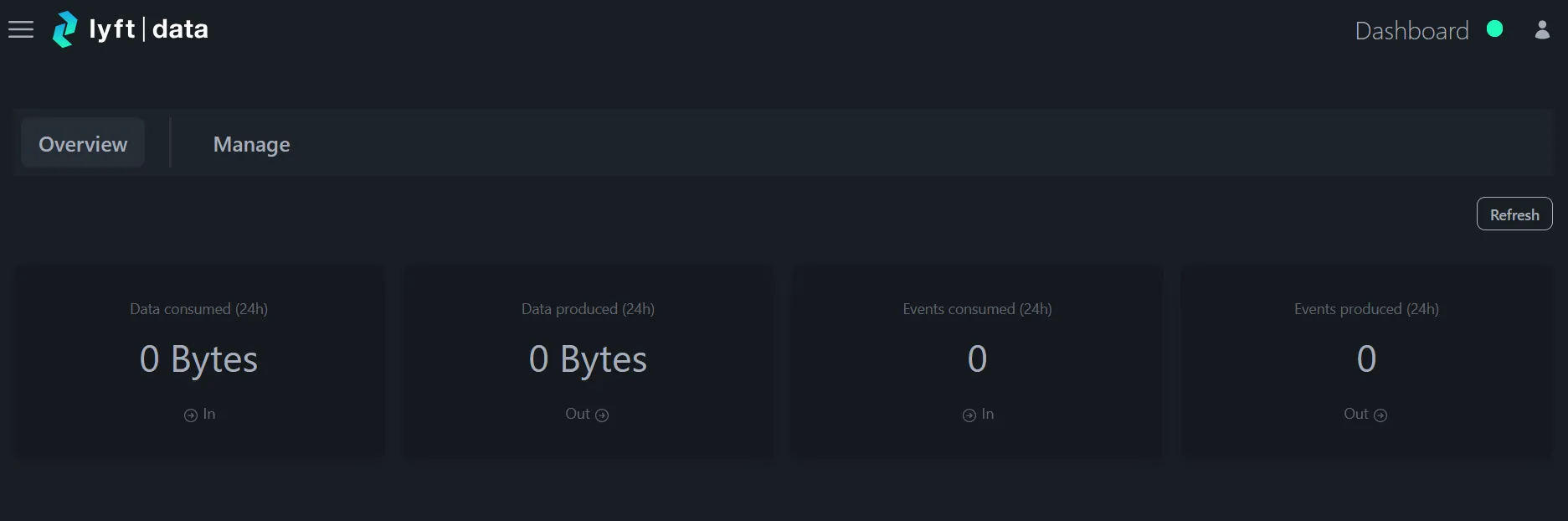

Open http://localhost:3000 in a browser and sign in as admin with the password you just captured. The landing page should be an empty dashboard like the screenshot above.

In Community Edition the server is ready to use immediately: the built-in worker is the only worker available and no license is necessary. If you are preparing for a production rollout, review the Licensing guide to learn how to upgrade and automate activation.

3. Confirm the built-in worker

The built-in worker registers with the server automatically. Verify it is online before building jobs:

- Open Workers in the navigation.

- The list should show the built-in worker as Online with type builtin.

If you later upgrade with a license, you can add external workers from this page. Follow the platform-specific guides under Install & Configure when you reach that stage.

4. Verify connectivity

Before building jobs, make sure the control plane and built-in worker see each other:

- The dashboard should list the built-in worker with a green status badge.

- The following command should return "online":

  curl -s http://localhost:3000/api/workers | jq '.[].status'

- The Issues panel on the dashboard should be empty.
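To turn the API check into a pass/fail gate for a script, you can read the statuses that jq prints (one JSON string per line) and fail on anything other than "online". This is a minimal sketch, assuming the endpoint returns a JSON array of worker objects with a status field, as the jq filter implies. The function name all_workers_online is hypothetical, and an empty response (for example, when the server is unreachable) is treated as a failure.

```shell
# Hypothetical helper: read one JSON-encoded status per line, as printed by
# jq '.[].status', and succeed only if at least one line was read and every
# line is exactly "online" (including the quotes jq emits).
all_workers_online() {
  local status seen=0
  # The "|| [ -n ... ]" clause also handles a final line without a newline.
  while IFS= read -r status || [ -n "$status" ]; do
    seen=$((seen + 1))
    if [ "$status" != '"online"' ]; then
      echo "unexpected worker status: $status" >&2
      return 1
    fi
  done
  # No statuses at all (e.g. curl failed) counts as not online.
  [ "$seen" -gt 0 ]
}
```

A typical invocation pipes the documented command into it: curl -s http://localhost:3000/api/workers | jq '.[].status' | all_workers_online && echo "all workers online"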

5. Next stop: build a pipeline

With the server running and the built-in worker online you are ready to author jobs. Continue with Running a Job to create your first pipeline and learn how staging, running, and deploying workflows fit together.